AMMs are not in equilibrium. While LPs for the top Uniswap pools have become profitable, their returns are within a rounding error of zero. Most people in DeFi, even those building AMMs, do not understand convexity costs, but as everyone figures it out, TVL will continue to stagnate, if not decline. Worse, many yield-farming scams are predicated on the underlying yields from LPing, and if these are built on a base return of zero at best, no amount of leverage can make them generate an attractive return.

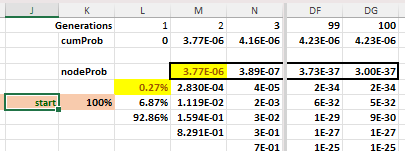

2023 and 2024 YTD Profit/USD Traded (in bps)

For example, the eth-usdc pool lost 0.000048 USD per USD traded in 2023 and has made 0.000071 USD per USD traded through 5/5/2024.
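The chart caption uses basis points, while the example quotes USD per USD traded. Below is a minimal Python sketch of the conversion (1 bp = 0.0001). The $1 billion volume figure is hypothetical. It is there only to show how small the resulting LP profit is.

```python
BPS_PER_UNIT = 10_000  # 1 basis point = 0.0001

def to_bps(profit_per_usd: float) -> float:
    """Express profit per USD traded in basis points."""
    return profit_per_usd * BPS_PER_UNIT

eth_usdc_2023 = -0.000048     # USD lost per USD traded in 2023
eth_usdc_2024_ytd = 0.000071  # USD made per USD traded through 5/5/2024

print(f"2023: {to_bps(eth_usdc_2023):.2f} bps")          # 2023: -0.48 bps
print(f"2024 YTD: {to_bps(eth_usdc_2024_ytd):.2f} bps")  # 2024 YTD: 0.71 bps

# Hypothetical scale check: LP profit on $1 billion of volume at the 2024 YTD rate.
hypothetical_volume = 1_000_000_000
print(f"${hypothetical_volume * eth_usdc_2024_ytd:,.0f}")  # $71,000
```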

Perps to the Rescue?

In TradFi, futures volume dominates spot volume, but in DeFi, it's the opposite. Many think decentralized perp trading will soon grow to reflect the natural dominance of futures, but that requires perps to become attractive to big players: whales. Crypto entrepreneurs are great at pumping meme coins, but they are incompetent at creating robust, sustainable markets in the assets that will get us through the inevitable fiat devaluation that will accompany the next financial crisis.

One problem in attracting whales is regulation. While the US has been making it easier for hedge funds to trade crypto, they are currently restricted to centralized exchanges like Coinbase and the Chicago Mercantile Exchange. Allowing US hedge funds to trade on the blockchain will take several years, as regulators currently do not even comprehend how wallets like MetaMask work.

Beyond the regulatory hurdle, anyone with $10 million cannot trust a dex perp because dex perps are non-recourse, often pseudonymously administered, and lack integrity.

To the degree that they are low-latency limit order books, they create strong incentives for covert preferential treatment.

The staking tokens and rewards programs offered by perp protocols show that insiders are either stupid or comfortable with a Ponzi.

Only an insider conspiracy explains a funding rate that is too high on average and positively correlated with recent price movements.

Insiders are comfortable lying, which makes them untrustworthy.

The official BitMEX perp funding rate mechanism has never worked as promoted and never will.

Referencing the academic work of Gehr and Shiller is deliberately misleading.

Who Gets Colocation?

In the 1990s, the rise of the internet spawned electronic trading via exchanges like Archipelago and Island. This replaced the old system, in which white-listed Nasdaq market makers or a monopoly specialist would handle equity orders. Because the new system was open, market makers competed on speed, as the fastest would dominate. This led exchanges to offer high-frequency trading firms colocation services, where market makers could put servers in the same building as the exchange servers. Many claim this is unfair, but it's the fairest arrangement imaginable. Your average day trader cannot compete with HFTs regardless of colocation, so its only effect is to make the competition among the elite open and equal. The alternative would be to give the advantage to those with covert agreements with exchange insiders, or to those who knew the owner of the building next door to the exchange. Because the monetary incentive was significant, traders would do whatever it took to get the shortest and fastest connection to the exchanges, which is why open colocation was the fairer option.

Many faux-dex perps use limit order books (LOBs) instead of AMMs, and LOBs require low latency and high bandwidth so that LPs can quickly and costlessly cancel and replace their resting limit orders. For example, DyDx and Hyperliquid run their own private blockchains, which can reduce block times to under a second. This is only possible via effective centralization. However, these perp exchanges must aspire to decentralization to be credible, as FTX highlighted that centralized exchanges are risky regardless of their official mission statements. The global messaging and consensus mechanisms in any potentially decentralizable blockchain preclude centralized servers or officially white-listed colocation services.

Nonetheless, the advantage of having the quickest connection to these exchanges is the same as in TradFi. Insiders at DyDx and similar exchanges can make a lot of money telling preferred traders how to get top-tier access to their network. With hundreds of milliseconds to work with, there is a large window for giving insiders a decisive advantage. These exchanges have no independent third party auditing their messages or trading tape, so insiders face no downside. One must trust that no one in these organizations is susceptible to bribery.

Staking and Rewards

The earlier version of perp player DyDx offered a rewards program where users could profit by wash trading. When the rewards program ended, the volume and TVL dropped by 90%. This has happened often because it works (see here and here). Fake volume fools outsiders into thinking there is real demand, which pushes up the perp protocol’s token price, enabling insiders to cash out with big gains or a big VC investment (initial FTX volume was $300MM/day, though there were no mentions of it circa June 2019).

Another common crypto trick is to offer users extra rewards for locking up their tokens, often for a year. The exchanges would not even pay them with outside tokens; they would just inflate their own token supply to give these stakers extra rewards. This is a simple Ponzi that works well until it doesn't. Staking makes sense for PoS blockchains like Ethereum because stakers provide a useful service: the staker offers collateral to bond the validator, giving the validator an incentive to act honestly, with both risk and return. Perp protocols GMX and Synthetix promoted staking their tokens for the sole purpose of artificially reducing the circulating supply.
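The dilution arithmetic behind self-funded staking rewards fits in a few lines. This is a hypothetical illustration, not any protocol's actual reward schedule. Rewards are minted in the protocol's own token, so stakers' share of the supply rises by far less than the headline reward rate. Non-stakers are diluted to pay for it, and no outside value enters the system.

```python
def staking_dilution(total_supply: float, staked: float, reward_rate: float):
    """Mint reward_rate * staked new tokens to stakers (hypothetical schedule).

    Returns (staker share before, staker share after, non-staker share after).
    """
    minted = staked * reward_rate
    new_supply = total_supply + minted
    staker_before = staked / total_supply
    staker_after = (staked + minted) / new_supply
    non_staker_after = (total_supply - staked) / new_supply
    return staker_before, staker_after, non_staker_after

before, after, others = staking_dilution(total_supply=100.0, staked=60.0, reward_rate=0.20)
# Stakers receive a nominal 20% reward, but their ownership share only rises from
# 60% to about 64.3% (a relative gain of about 7.1%), and the gain comes entirely
# out of non-stakers, whose share falls from 40% to about 35.7%.
print(f"{before:.1%} -> {after:.1%}; non-stakers {others:.1%}")
```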

These tactics are symptomatic of bad faith, not something any investor with other people’s money should trust.

The Perp Funding Rate Mechanism

In traditional futures markets, there are maturity dates at which traders can deliver spot to settle their positions. This enables arbitrage if the futures and spot prices deviate by more than their relative cost of carry, as one can simultaneously buy one and sell the other and then close the position at maturity for a sure profit (see the footnote below for the standard theory).
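Below is a minimal sketch of the futures-spot arbitrage check. It assumes continuous compounding and a single carry term: r is the financing rate on the quote currency, and q is the yield earned by holding the underlying (a dividend or lending rate). The 5% and 2% rates echo figures used later in this piece. The prices and the 0.1% cost band are hypothetical.

```python
import math

def fair_futures_price(spot: float, r: float, q: float, t: float) -> float:
    """Cost-of-carry fair value: spot * exp((r - q) * t), with t in years."""
    return spot * math.exp((r - q) * t)

def arbitrage_signal(futures: float, spot: float, r: float, q: float,
                     t: float, cost: float = 0.0) -> str:
    """Which trade, if any, locks in a profit when held to maturity.

    cost is a proportional band covering fees and other frictions.
    """
    fair = fair_futures_price(spot, r, q, t)
    if futures > fair * (1 + cost):
        return "sell futures, buy spot"   # cash-and-carry
    if futures < fair * (1 - cost):
        return "buy futures, sell spot"   # reverse cash-and-carry
    return "no arbitrage"

# Hypothetical 3-month contract on ETH at 3000 USD, USD rate 5%, ETH lending rate 2%.
print(round(fair_futures_price(3000, 0.05, 0.02, 0.25), 2))
print(arbitrage_signal(3100, 3000, 0.05, 0.02, 0.25, cost=0.001))
```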

Perpetual swaps have no expiration dates, so there is no delivery. This was necessary when BitMEX created them because the exchange only took Bitcoin, so contracts could not be delivered in ETH or USDC. BitMEX proposed a perp premium funding rate mechanism as an alternative to the arbitrage mechanism used in traditional futures markets.

The perp premium is the percent difference between the perp and spot prices.

Perp Premium = PerpPrice / SpotPrice - 1

The standard story is that a bullish market sentiment causes the perpetual contract's price to exceed the spot price. To equilibrate the market, the longs will pay the shorts a funding rate when the perp premium is positive, discouraging longs and encouraging shorts. The logic works the opposite way when the perp premium is negative.
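The sketch below covers the perp premium definition and the payment direction in the standard story. It deliberately ignores the flat zone, caps, and averaging windows discussed next, and the prices are hypothetical.

```python
def perp_premium(perp_price: float, spot_price: float) -> float:
    """Perp Premium = PerpPrice / SpotPrice - 1."""
    return perp_price / spot_price - 1

def funding_direction(premium: float) -> str:
    """Who pays whom under the standard story (no flat zone, no caps)."""
    if premium > 0:
        return "longs pay shorts"
    if premium < 0:
        return "shorts pay longs"
    return "no payment"

p = perp_premium(3003.0, 3000.0)         # perp trades 0.1% above spot
print(f"{p:.2%}", funding_direction(p))  # 0.10% longs pay shorts
```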

There are many variations in how the perp premium is calculated and how it translates into an actual overnight rate. For example, the spot price can be taken from an oracle or from a spot market on the exchange. The perp price used in this calculation has many degrees of freedom, such as the simple mid-price of the best bid and ask, or the bid price for a $1,000 order. The perp premium is generally averaged over 8 hours and then paid at the end of the period. Some exchanges sample every tick or minute and pay every hour or continuously, but these differences are insignificant. These calculations are impossible for an outside auditor to validate, because differences within the standard error of these measurements are economically significant (e.g., a 0.03% higher perp premium raises the annualized funding rate by 11%).
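The parenthetical arithmetic can be checked directly. Following the text, this sketch treats the perp premium as a daily rate and annualizes it by simple multiplication by 365, with no compounding. Splitting each day into three 8-hour payments does not change the annual total.

```python
DAYS_PER_YEAR = 365
PAYMENTS_PER_DAY = 3  # one payment per 8-hour period

def annualized_funding(daily_premium: float) -> float:
    """Simple (non-compounded) annualization of a daily perp premium."""
    return daily_premium * DAYS_PER_YEAR

def per_payment(daily_premium: float) -> float:
    """Rate charged at each 8-hour settlement."""
    return daily_premium / PAYMENTS_PER_DAY

# A 0.03% difference in the measured daily premium, small enough to sit inside
# the measurement noise, moves the annualized funding rate by about 11 points.
print(f"{annualized_funding(0.0003):.2%}")   # 10.95%
print(f"{per_payment(0.0003):.4%}")          # 0.0100%
```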

Initially, the perp premium was mapped into a daily rate, which is usually the case today, though occasionally, it is mapped into 8- or 1-hour rates. There are usually max and min rates and a flat zone set at the default funding rate of 10.95%. For example, this is the function for BitMEX.
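Below is a hedged sketch of such a function. BitMEX's documentation describes its funding rate per 8-hour interval as the premium index P plus clamp(I - P, -0.05%, +0.05%). Here I is an interest-rate component of 0.01% per interval for its flagship contracts, which works out to 0.03% a day, or the 10.95% annual default. The outer cap below is a hypothetical placeholder. Actual caps and parameters vary by contract and should be checked against the exchange's current documentation.

```python
def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))

def bitmex_style_funding(premium_index: float,
                         interest: float = 0.0001,  # 0.01% per 8h interval
                         band: float = 0.0005,      # +/-0.05% dampener
                         cap: float = 0.0075        # hypothetical outer cap
                         ) -> float:
    """Funding rate per 8-hour interval: P + clamp(I - P, -band, band), capped."""
    raw = premium_index + clamp(interest - premium_index, -band, band)
    return clamp(raw, -cap, cap)

def annualize_8h(rate: float) -> float:
    return rate * 3 * 365  # three intervals per day, simple annualization

# Any premium index within 0.05% of I lands in the flat zone and pays exactly I.
for p in (-0.0003, 0.0, 0.0003, 0.0010):
    f = bitmex_style_funding(p)
    print(f"P={p:+.4%}  F={f:+.4%}  annualized={annualize_8h(f):.2%}")
```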

Presumably, 'bullishness' drives the perp premium, though theoretically, this makes no sense. If people think the price will be higher in the future, that belief gets reflected in the spot price, not the basis (see Samuelson's Law of Iterated Expectations). In any case, to equilibrate bullishness with bearishness, the funding rate incents more shorts and fewer longs, reducing the perp premium. For example, a 0.09% perp premium implies a 33% annualized funding rate paid by the longs to the shorts (365 × 0.09% ≈ 33%). Over a day, that is just a 0.09% return, while average daily volatility is around 4.0%, which generates an insignificant reward/risk ratio, especially after trading costs.
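Here is the reward/risk comparison from the example above as a short sketch. The 0.09% premium and 4.0% daily volatility come from the text. Like the text, the sketch annualizes by simple multiplication and ignores trading costs.

```python
DAYS_PER_YEAR = 365

def funding_reward_to_risk(daily_premium: float, daily_vol: float) -> float:
    """One day's funding income divided by one day's price volatility."""
    return daily_premium / daily_vol

daily_premium = 0.0009  # 0.09% perp premium, treated as a daily rate
daily_vol = 0.04        # ~4.0% average daily volatility

print(f"annualized funding: {daily_premium * DAYS_PER_YEAR:.0%}")                    # 33%
print(f"daily reward/risk: {funding_reward_to_risk(daily_premium, daily_vol):.4f}")  # 0.0225
```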

Yet the short's funding rate only materializes to any significant degree if that perp premium persists, and the current perp premium is but a single moment in that calculation; the current perp premium might not even be sampled in calculating the average perp premium used in the next 8-hour period's funding rate payment.
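To see how little a single moment matters, consider a hypothetical exchange that samples the premium once a minute over an 8-hour window (480 samples) and pays the simple average. A one-minute spike to a 0.5% premium, against a steady 0.01% baseline, shifts the average by only about 0.001%.

```python
SAMPLES_PER_WINDOW = 8 * 60  # one sample per minute for 8 hours

def window_average(samples: list[float]) -> float:
    """Simple average premium used for the next funding payment (assumed scheme)."""
    return sum(samples) / len(samples)

baseline = 0.0001  # steady 0.01% premium
spike = 0.005      # 0.5% premium for a single minute

steady = [baseline] * SAMPLES_PER_WINDOW
spiked = [baseline] * (SAMPLES_PER_WINDOW - 1) + [spike]

shift = window_average(spiked) - window_average(steady)
# Each sample carries a weight of 1/480, so the shift is (spike - baseline) / 480.
print(f"{shift:.5%}")
```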

In practice, traders look at the historical funding rate premium over the past few days, not the current perp premium. At any point in time, the perp premium does not incent buys and sells as in arbitrage trading, where if the spot price deviates from the futures price, an arbitrageur can instantly lock in a profit.

Arbitrage bounds prices on spot AMMs, and we can see that liquid AMM prices are almost always within the fee of the current world price on the major centralized exchanges. The funding rate mechanism provides a weak simulacrum of futures-spot arbitrage. It is more akin to how the Fed adjusts interest rates to manage the economy. Higher interest rates diminish investment, but it's a very imprecise mechanism that operates on long and variable lags. No economist would assert that the effect of interest rates on investment is like some arbitrage mechanism.
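The arbitrage bound on a spot AMM can be written as a no-arbitrage band. This minimal sketch assumes a single proportional fee charged on the input amount (as in a Uniswap v2-style pool), marginal trade sizes, and no gas or external trading costs. Under those assumptions, buying from the pool effectively costs price / (1 - fee), and selling to it yields price × (1 - fee). Arbitrage pays only when the external price moves outside that range. The 0.3% fee is a common Uniswap fee tier, and the prices are hypothetical.

```python
def no_arb_band(pool_price: float, fee: float) -> tuple[float, float]:
    """(lower, upper) external prices between which trading against the pool
    is unprofitable for an arbitrageur (marginal size, fee on input, no gas)."""
    return pool_price * (1 - fee), pool_price / (1 - fee)

def arbitrage_pays(pool_price: float, external_price: float, fee: float) -> bool:
    lower, upper = no_arb_band(pool_price, fee)
    return not (lower <= external_price <= upper)

print(no_arb_band(3000.0, 0.003))
print(arbitrage_pays(3000.0, 3005.0, 0.003))  # False: inside the fee band
print(arbitrage_pays(3000.0, 3020.0, 0.003))  # True: buy from pool, sell outside
```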

In practice, perp markets operate on a focal point, the current spot (Schelling points, Aumann's correlated equilibria). This makes sense because liquidity providers want to give customers what is promised to keep them returning. Indeed, many perp markets like Synthetix have AMMs that work fine without a perp funding rate pretext, highlighting their redundancy.

On one level, there is nothing wrong with this mechanism. BitMEX introduced it out of a need in the era before wrapping and stablecoins, and they needed a cover story to get traders to trust that their perp markets were 'trustless,' not merely based on focal points. However, given that we now know this perp premium mechanism has never operated like a governor on a steam engine and that it was just a white lie to get a market off the ground, its persistence is an insult. Exchanges, from Binance to BitMEX to DyDx, lie about how funding rates are determined and what they are for. Funding rates do not tie perp prices to spot via arbitrage or the emergent market price. The funding rates are set indirectly by insiders, who set the perp premium to their advantage.

Equilibrium Funding Rate Too High

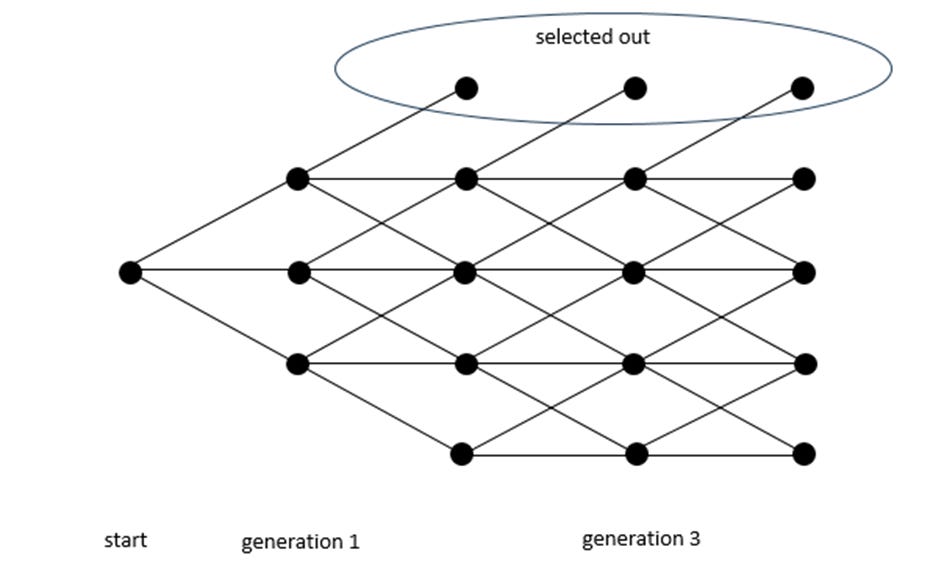

DeFi perp traders overwhelmingly want to lever long, not short. This makes sense because, conditional on having money on a blockchain, one is generally bullish; if you are bearish on ETH, you generally do not have anything on the blockchain. For example, the chart shows the long-short trader open interest on GMX for the Arbitrum blockchain. Green is long; red is short.

Long-Short Perp Positions on GMX

There are two sides to every perp trade, so if traders are net long, the perp liquidity providers taking the other side must be net short on average. This is the general equilibrium on perp markets: the funding rate does not bring traders' long-short demand into equilibrium.

Most perp dexes either employ or are affiliated with their primary liquidity providers. This makes sense because when starting a market, it helps to seed it with liquidity. This short bias works well for the LPs, as it is easy to hedge their short perp positions with a long spot position on the blockchain. These LPs naturally want the default funding rate to pay them, the shorts. Working with the perp admins, who calculate nebulously sampled average perp premiums using 'impact' prices and variously amended price feeds for the spot price, the LPs can target the perp premium to be whatever they can get away with.
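The LPs' side of the ledger can be sketched with hypothetical open-interest numbers. Because every contract has two sides, LPs absorb the traders' net long imbalance as a net short. With a positive funding rate, the longs pay and the LPs collect on that net short. A spot hedge, which is not modeled here, leaves them roughly price-neutral. The 10.95% default rate comes from the text. The open-interest figures are illustrative only.

```python
def lp_net_position(trader_long_oi: float, trader_short_oi: float) -> float:
    """LPs take the other side of the traders' net position (negative = short)."""
    return -(trader_long_oi - trader_short_oi)

def lp_annual_funding(trader_long_oi: float, trader_short_oi: float,
                      annual_rate: float) -> float:
    """Funding collected by net-short LPs when longs pay shorts at annual_rate.

    Trader longs pay on all long OI, trader shorts receive on theirs, and the
    LPs receive the remainder on their net short.
    """
    return -lp_net_position(trader_long_oi, trader_short_oi) * annual_rate

longs, shorts = 100e6, 40e6  # hypothetical trader open interest in USD
print(lp_net_position(longs, shorts))            # -60000000.0
print(lp_annual_funding(longs, shorts, 0.1095))  # about 6.57 million USD a year
```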

Theoretically, the perp funding rate for ETH-USDC should be around 4%, given that the USDC and ETH lending rates on the blockchain are 6% and 2%, respectively. The fact that the default rate for dex perps is 11% highlights their gamed nature. An honest perp exchange would allow users to post ETH as collateral, short that ETH, and collect the funding rate. This rate would be, at most, the riskless USD rate of 5%. Perp markets do not allow this because it would reduce the returns for their LPs.
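The 4% figure follows from covered interest rate parity, the theory laid out in the footnote. This minimal sketch assumes annual lending rates of 6% for USDC and 2% for ETH, as in the text. It compares the exact parity premium, its usual linear approximation, and the 10.95% default funding rate.

```python
def parity_premium(r_usd: float, r_eth: float) -> float:
    """Exact covered-interest-parity premium: (1 + r_usd) / (1 + r_eth) - 1."""
    return (1 + r_usd) / (1 + r_eth) - 1

def parity_premium_approx(r_usd: float, r_eth: float) -> float:
    """First-order approximation: the rate differential."""
    return r_usd - r_eth

r_usdc, r_eth = 0.06, 0.02
default_rate = 0.1095  # 0.03% a day x 365

exact = parity_premium(r_usdc, r_eth)          # ~3.92%
approx = parity_premium_approx(r_usdc, r_eth)  # 4.00%
print(f"exact {exact:.2%}, approx {approx:.2%}, excess over parity {default_rate - approx:.2%}")
```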

The House Money Effect

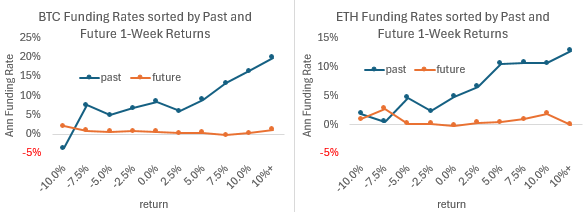

This perp premium farce allows perp insiders to reap extra returns when traders, who are generally long, are sitting on big profits. It is like a bettor who has won a lot and is playing with 'house money,' so he doesn't mind giving the dealer a big tip or making a frivolous bet. One can see this by comparing the funding rate with the price level; funding rates often exceed 50%. In traditional financial markets, the primary stylized fact is that price increases are associated with negative financing rates. The crypto pattern is anomalous, reflecting a perp conspiracy that, in TradFi, even regulators as competent as the current SEC could prove illegal.

The graphs above make it hard to see which series is leading. If we cross-tab the funding rate with the prior return at a shorter duration, there's a clear positive relation; when cross-tabbed against future returns, there is no relation. Thus, there is no plausible story that the funding rate reflects a risk premium that shows up in future returns.
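The lead-lag test described above can be sketched as two correlations: the funding rate against the prior return and against the subsequent return. This is a hypothetical methodology sketch, not the author's code or data. The toy series at the end is constructed so that funding simply echoes the previous period's return, which is the pattern the text describes.

```python
import math

def pearson(x: list[float], y: list[float]) -> float:
    """Plain Pearson correlation of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def lead_lag_correlations(funding: list[float], returns: list[float],
                          lag: int = 1) -> tuple[float, float]:
    """(corr with the return lag periods earlier, corr with the return lag periods later)."""
    prior = pearson(funding[lag:], returns[:-lag])
    future = pearson(funding[:-lag], returns[lag:])
    return prior, future

# Toy data: funding[t] echoes returns[t-1], so the prior-return correlation is 1.
returns = [0.01, -0.02, 0.03, 0.00, -0.01, 0.02, 0.015, -0.005]
funding = [0.0] + returns[:-1]
prior, future = lead_lag_correlations(funding, returns)
print(f"prior {prior:.2f}, future {future:.2f}")
```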

Academic Pretext

In podcasts about perps, it is common to hear the perp premium mechanism described as an ingenious blockchain application of Nobel Prize-winning research. Robert Shiller had created a well-known housing price index, and as housing is a major asset class in any economy, he thought an active futures market would be helpful. The problem is that, unlike with a stock index, there is no way to deliver the houses that underlie a housing index. With 'delivery' out of the question, in 1992 he proposed housing futures with no maturity dates: a perpetual futures contract. He used an econometric model to estimate monthly housing rents, analogous to a stock's dividends, which would be credited or debited to the daily margin. The futures price would be the market's present value of these rents, just as a stock's price is the present value of its dividends.

Apart from being a perpetual futures contract with no fixed delivery date, it had nothing like the perp premium tying the perp price to the spot price via a funding rate. The funding rate was calculated via an exogenous econometric model using macro data.

Adam K. Gehr's 1988 article on the Chinese Gold and Silver Exchange Society of Hong Kong (CGSES) is also commonly mentioned and is a better analog to crypto perp markets. The CGSES had a perpetual futures contract that used a nightly funding rate auction. If the price of Gold closed at $111.0, and the daily interest rate auction was set at $0.15, the closing 'futures' price would be marked at the spot close plus the interest rate, $111.15. Thus, if the long sold at $112.0 the next day, he would make 0.85, using the futures close as the basis.
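The CGSES marking in this example reduces to simple arithmetic: the contract's cost basis for the next day is the spot close plus the auctioned interest. Here is a short sketch using the numbers from the text:

```python
def cgses_mark(spot_close: float, interest_auction: float) -> float:
    """Closing 'futures' mark: spot close plus the nightly interest auction result."""
    return spot_close + interest_auction

def long_pnl(sale_price: float, cost_basis: float) -> float:
    return sale_price - cost_basis

basis = cgses_mark(111.0, 0.15)   # gold closes at 111.0; the interest auction sets 0.15
pnl = long_pnl(112.0, basis)      # the long sells at 112.0 the next day
print(f"basis {basis:.2f}, P&L {pnl:.2f}")  # basis 111.15, P&L 0.85
```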

Gehr suggested that, like in the CGSES, a short separate trading period after the spot close could facilitate perpetual futures, which have the advantage of automatically rolling. The mechanism would be just like in the CGSES, so if the spot price closed at 111.0, the futures market would trade for 15 minutes and set a price at 111.15.

In Gehr's model, the perp premium directly generates a funding rate, but it differs profoundly from the crypto perp approach. The spot market was taken as a given, and the proposed closing perp price was determined after the spot market closed, a direct analog to the overnight funding rate auction used at the CGSES at the end of daily trading. The perp closing price was a simple way to account for the overnight lending rate within the structure of daily margining, using the prior perp close as the cost basis.

It's ignorance or willful deception to assert these papers demonstrate the economic soundness of the perp premium funding rate mechanism.

Perps Aren't Whale-Friendly

For a small trader, the perp conspiracy is not a big deal. They get access to 50x leverage, something many cannot get elsewhere. Just as many people don't mind paying a 50% premium for lottery tickets, small traders tolerate the funding rate charge in exchange for easy access to leverage.

For a whale, however, it's a dealbreaker. If a whale wants to be a market maker on one of these exchanges, they will never be competitive unless they are part of the insider club. If they want to put on a position, they know the insider LPs will have the opposite position. In a large hedge fund, a portfolio manager with 5% alpha is considered exceptional, but a big crypto portfolio manager investing in perps is exposed to insiders bumping the funding rate to offset any conceivable alpha.
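Here is a rough sketch of why this matters at the portfolio level. It rests on simplifying assumptions added for illustration, not taken from the text. The manager runs a net long perp book, pays the 10.95% default funding rate on its full notional, and measures alpha on that same notional. About 4% would be fair under covered interest parity. Under these assumptions, the excess funding alone more than consumes an exceptional 5% alpha.

```python
def alpha_after_excess_funding(gross_alpha: float, funding_paid: float,
                               fair_funding: float) -> float:
    """Alpha left after paying funding above its fair (parity) level."""
    return gross_alpha - (funding_paid - fair_funding)

print(f"{alpha_after_excess_funding(0.05, 0.1095, 0.04):.2%}")  # -1.95%
```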

If you think I am being paranoid, consider the case of perp.fi’s virtual AMM, which used rewards to pump its token market cap to $1 billion. While one commenter opined it should be considered for a Nobel Prize, it had the minor flaw that the LP collective could become insolvent. Eventually, the LP collective's shortfall exceeded its insurance fund. When this was discussed on various user forums, the chat highlighted animus towards the accounts with large gains, a predictable rationalization when debating whether to renege on a large outstanding debt. For example:

"IMO it would be correct to add the option of not compensating whales like them who didn't bring any value to the protocol, just risks. They didn't do any active trading, didn't generate a lot fees therefore, just reaped funding (keeping neutral positions most likely)."

There are many good reasons to not pay debts to rich people. A trustless, decentralized contract has to eliminate any such discretion.

Decentralized perps can work, but they need an integrity enema to entice the whales needed to flourish. Protocols should emphasize decentralization, transparency, and immutability instead of focusing on creating a closer substitute for centralized exchanges.

The theory that explains the perp funding rate is a typical non-equilibrium story that sounds good at 30,000 feet but makes no sense. Like the explanation that there are "more buyers than sellers," the idea that long demand shows up in futures price premiums makes no sense. In 1967, Paul Samuelson demonstrated the law of iterated expectations, which implies current sentiment is reflected in spot prices, not forward/futures prices. Funding rates are instead a function of the relative interest rates of the two assets traded, such as the interest rate on the USD and the dividend rate on stocks.

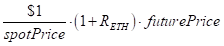

The theory is called ‘covered interest rate parity,’ and works like this. One can take a dollar and earn interest via money markets or Treasury Bills directly which generates:

Alternatively, one can turn this into ETH, earn the ETH lending rate, and then convert back into USD:

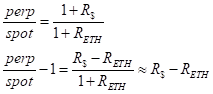

In equilibrium, these two paths generate the same net return. Relabeling futurePrice as perp and spotPrice as spot, and rearranging, we get:

$$\frac{\text{perp}}{\text{spot}} = \frac{1 + r_{USD}}{1 + r_{ETH}}$$

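Which rearranges further to

$$\text{Perp Premium} = \frac{\text{perp}}{\text{spot}} - 1 = \frac{r_{USD} - r_{ETH}}{1 + r_{ETH}} \approx r_{USD} - r_{ETH}$$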

Here the perp premium is just the USD interest rate minus the ETH interest rate. In commodity markets, there can be significant storage costs, such as grain spoilage, or a lack of storage space for oil during a demand collapse, as in March 2020. There are no storage costs in crypto. The USDC and ETH lending rates are approximately 6% and 2%, respectively. Thus, in theory,